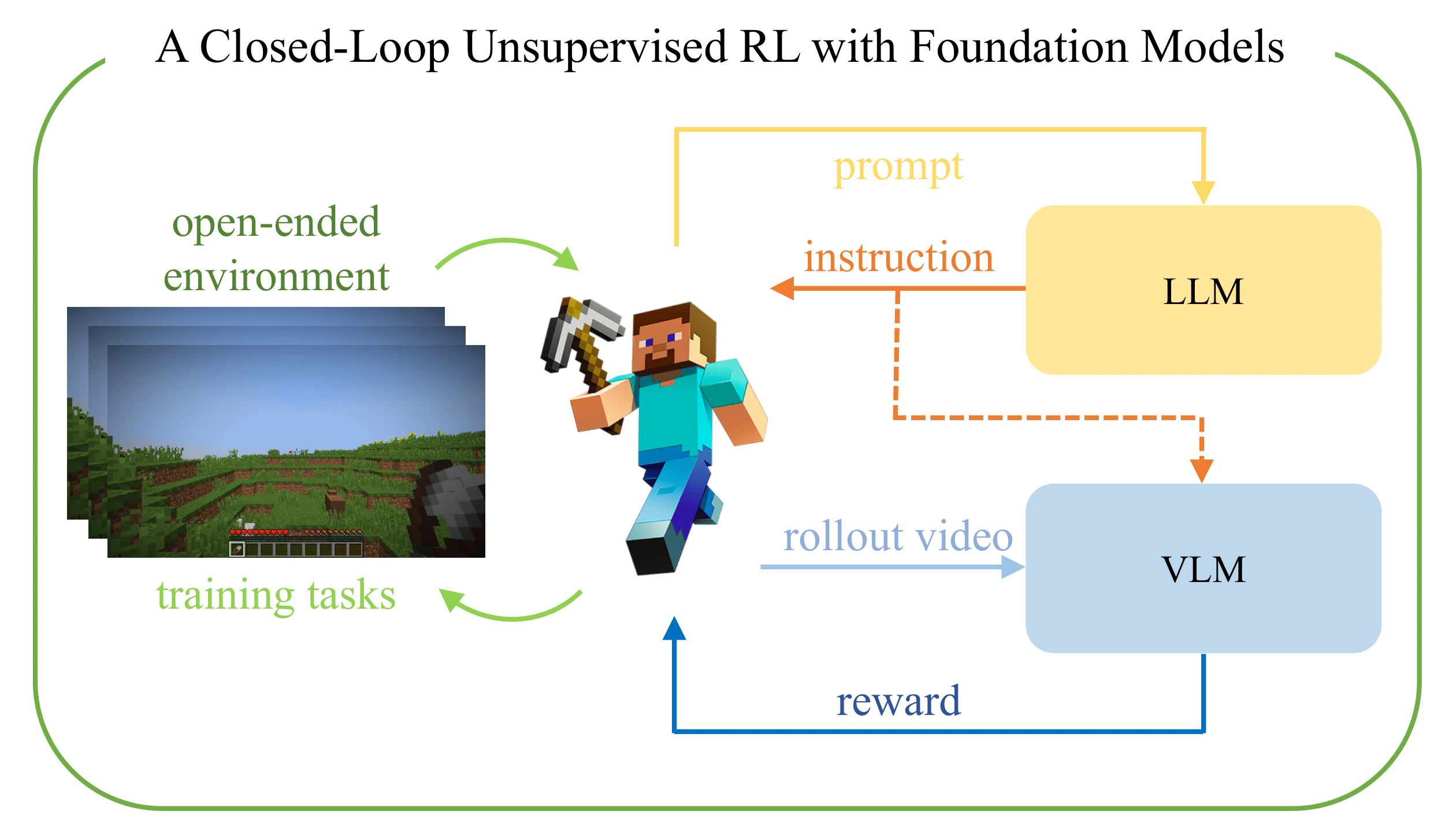

We propose LiFT (unsupervised Learning with Foundation model Teachers), a framework that uses foundation models as teachers to guide a reinforcement learning agent toward semantically meaningful behavior without human feedback.

LiFT Framework

LiFT uses foundation models (FMs) to guide reinforcement learning (RL) agents, automating the acquisition of semantically meaningful visuomotor skills. The framework consists of two phases: 1) task instruction proposal with a large language model (LLM) and 2) multi-task, language-conditioned policy learning guided by a vision-language model (VLM).

1. LLM Task Instruction Proposal

An LLM generates a grounded set of useful imagined task instructions. For example, if the agent is standing in front of a cow with a bucket in its hand, the LLM can propose the task "milk a cow".
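The proposal step can be pictured as turning the agent's context into a prompt and parsing the reply into instructions. The sketch below is only an illustration: the `AgentContext` fields, the prompt wording, and both helper functions are hypothetical and are not taken from the LiFT implementation, and the call to the LLM itself is left out.

```python
from dataclasses import dataclass, field


@dataclass
class AgentContext:
    """Hypothetical summary of what the agent currently perceives."""
    nearby_entities: list[str] = field(default_factory=list)
    inventory: list[str] = field(default_factory=list)


def build_proposal_prompt(ctx: AgentContext, num_tasks: int = 5) -> str:
    """Format the agent's context into a task-proposal prompt (assumed wording)."""
    entities = ", ".join(ctx.nearby_entities) or "nothing"
    items = ", ".join(ctx.inventory) or "nothing"
    return (
        f"The agent sees: {entities}.\n"
        f"The agent holds: {items}.\n"
        f"List {num_tasks} short, feasible task instructions, one per line."
    )


def parse_instructions(llm_output: str) -> list[str]:
    """Turn a line-separated LLM reply into a deduplicated list of instructions."""
    instructions = []
    for line in llm_output.splitlines():
        # Strip leading list markers such as "1.", "2)" or "-", then normalize case.
        text = line.strip().lstrip("-*0123456789.) ").strip().lower()
        if text and text not in instructions:
            instructions.append(text)
    return instructions
```

For the cow-and-bucket example, `build_proposal_prompt(AgentContext(["cow"], ["bucket"]))` yields a prompt that mentions both items, and a reply containing a line such as "1. Milk a cow" would be parsed into the instruction "milk a cow".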

2. VLM-Guided Policy Learning

A VLM computes the reward as an alignment score between the video rollout and the corresponding language instruction. This reward is used to train a multi-task policy that performs semantically meaningful behaviors.
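As a rough illustration of an alignment score, the sketch below computes the cosine similarity between a video-clip embedding and an instruction embedding. It assumes the VLM has already produced both embeddings as plain lists of floats; the function names are hypothetical, and the actual LiFT reward pipeline may differ.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rollout_rewards(
    clip_embeddings: Sequence[Sequence[float]],
    instruction_embedding: Sequence[float],
) -> list[float]:
    """One alignment score per video-clip embedding along a rollout."""
    return [cosine_similarity(e, instruction_embedding) for e in clip_embeddings]
```

In a multi-task setting, the same function would be called with the embedding of whichever instruction the policy is currently conditioned on.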

On Open-ended Environment

We verify our method in the challenging open-ended MineDojo environment and compare it with conventional unsupervised RL algorithms that use an entropy-maximizing objective.
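To make the entropy-maximizing objective concrete, the sketch below shows a simplified particle-based reward in the spirit of APT: a state is rewarded for being far from its k nearest neighbors in a batch. The function name, the defaults for `k` and `c`, and the use of raw state vectors instead of learned representations are assumptions for illustration, not the baselines' exact implementation.

```python
import math
from typing import Sequence


def knn_entropy_rewards(
    states: Sequence[Sequence[float]], k: int = 3, c: float = 1.0
) -> list[float]:
    """Reward each state by log(c + mean distance to its k nearest neighbors)."""
    if len(states) < 2:
        raise ValueError("need at least two states to compute neighbor distances")
    rewards = []
    for i, state in enumerate(states):
        # Distances from this state to every other state in the batch, ascending.
        dists = sorted(
            math.dist(state, other) for j, other in enumerate(states) if j != i
        )
        nearest = dists[:k]
        rewards.append(math.log(c + sum(nearest) / len(nearest)))
    return rewards
```

States in rarely visited regions receive larger rewards, which pushes the agent toward wide coverage of the state space regardless of whether the resulting behavior is semantically meaningful.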

Success Demonstrations

The images below show example skills performed by our agent.

"Shear a sheep"

"Collect logs"

"Attack a sheep"

"Combat a zombie"

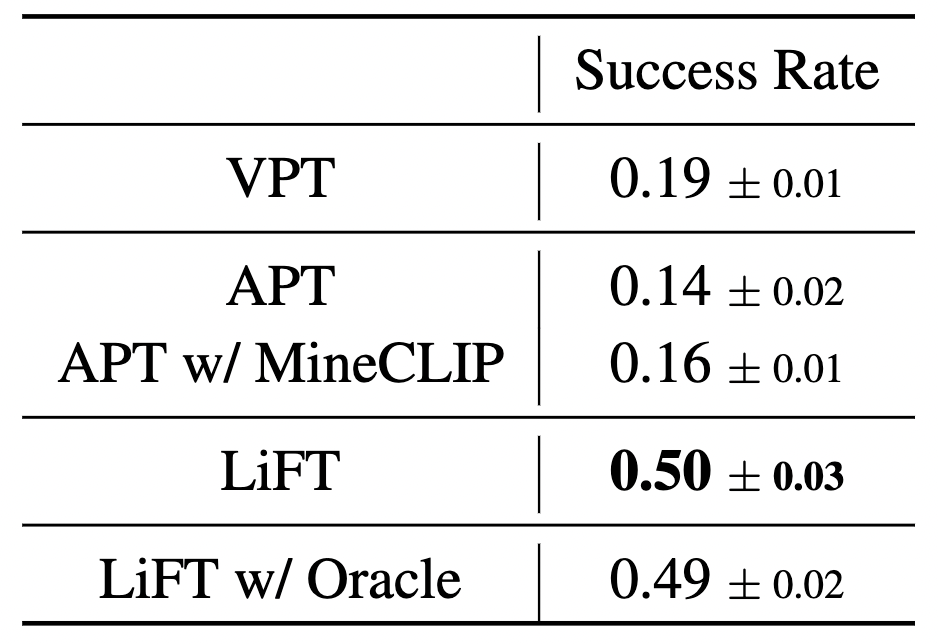

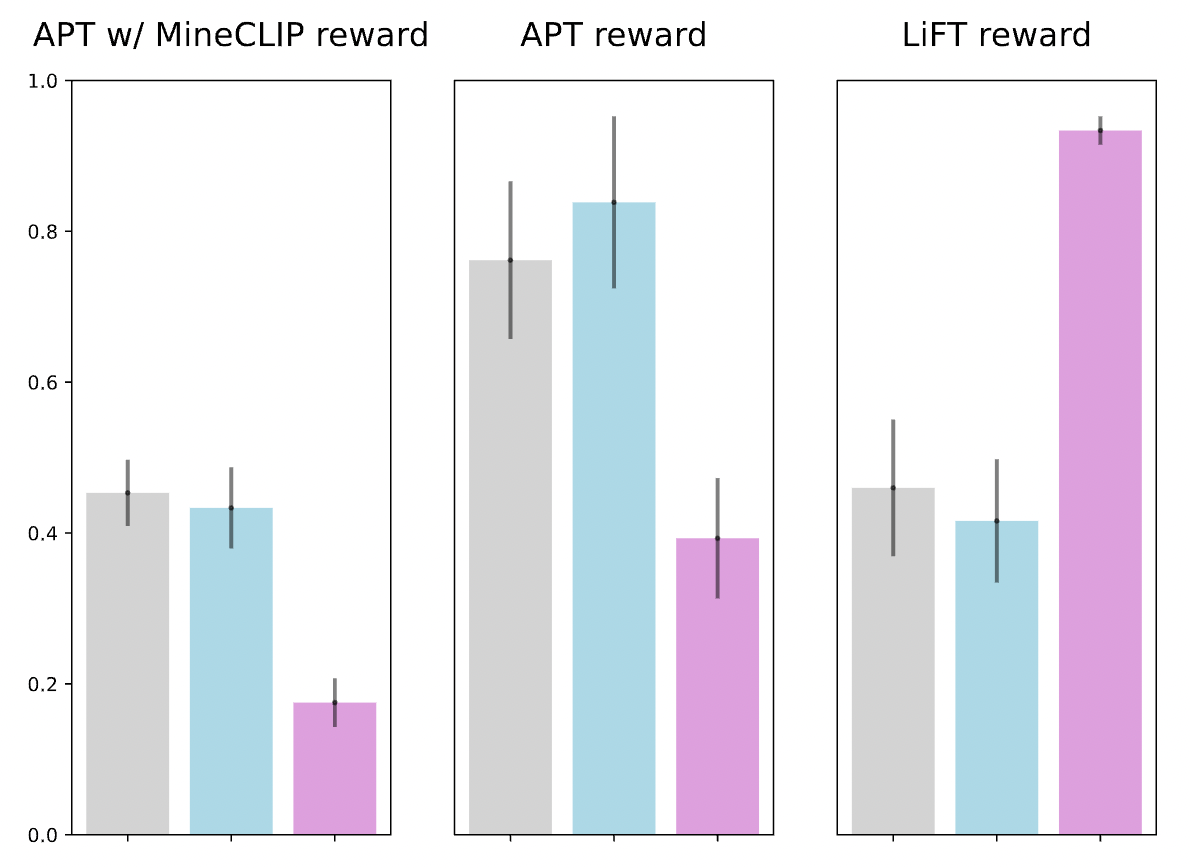

Comparison with Baselines

In the zero-shot evaluation, our approach achieves higher success rates than the unsupervised RL baselines (APT and APT w/ MineCLIP) and is even comparable to the baseline that uses oracle task proposals annotated by humans (LiFT w/ Oracle).

LiFT succeeds in training a policy equipped with semantically meaningful behavior. In contrast, the success rates of the unsupervised RL baselines are even lower than that of the VPT baseline.

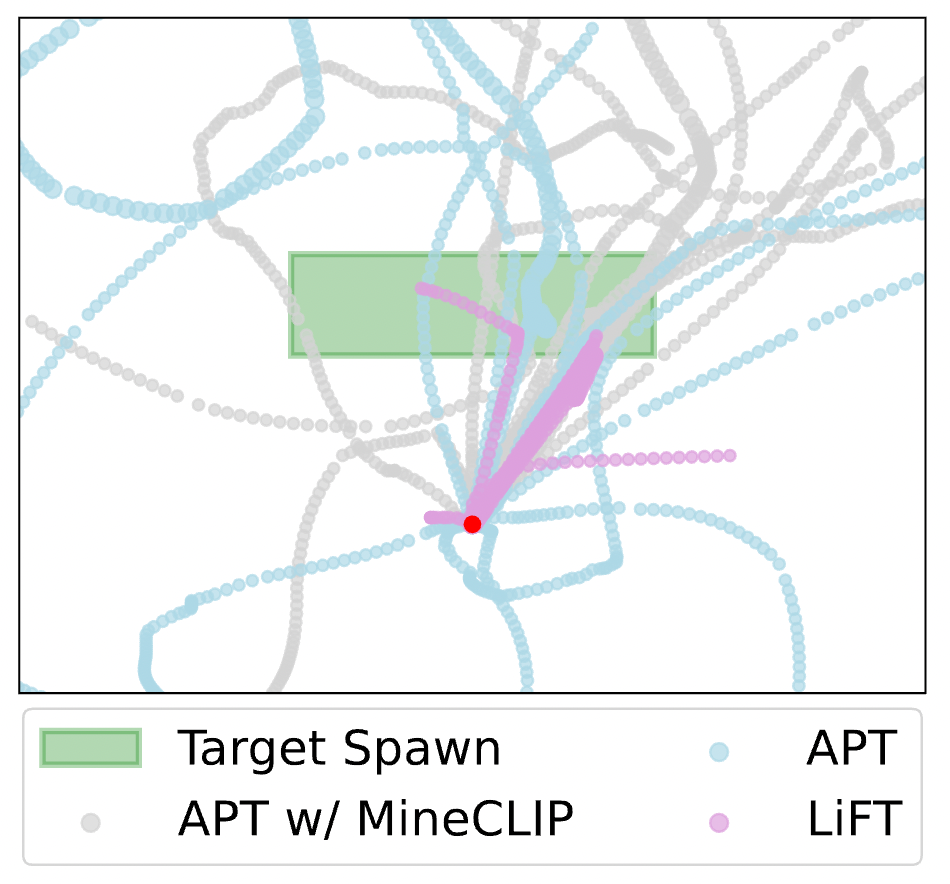

Behavior Analysis

(a) Trajectories in top view

(b) Normalized reward scores

LiFT agents interact efficiently with the target entity, which is spawned in the green box region in the figure. APT agents, in contrast, do not exhibit semantically meaningful behavior; they wander over a wide range of regions to maximize the diversity objective.

For Scalable Learning

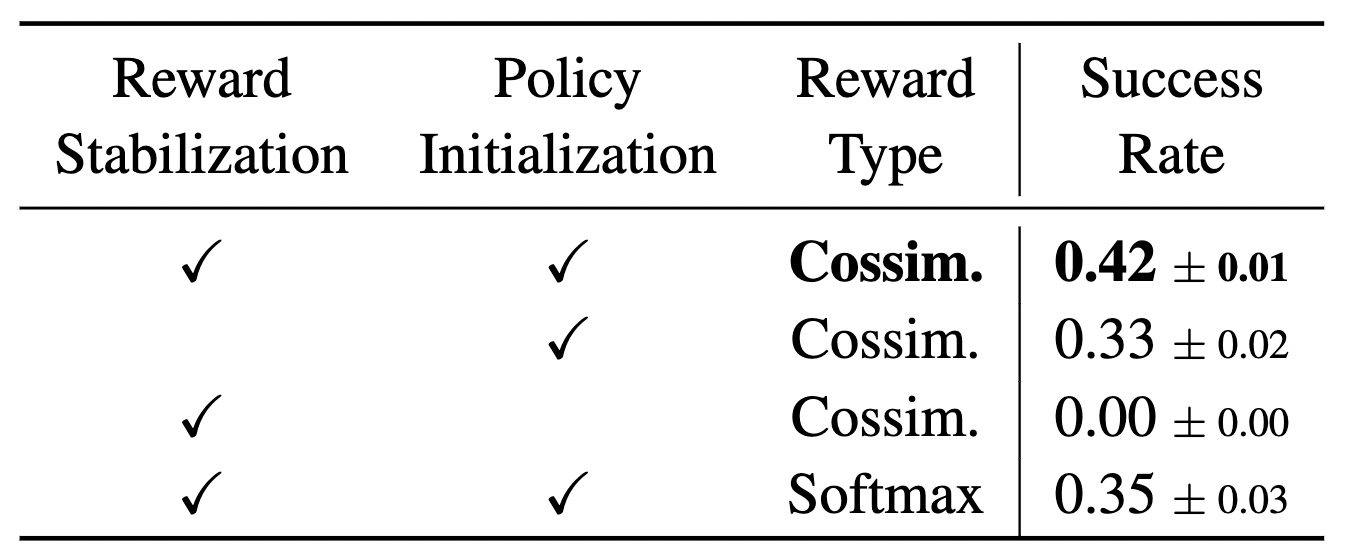

We analyze the limitations that the FMs used in our experiments pose for scalable learning. To highlight the design choices we made to address these limitations, we present the results of an ablation experiment.

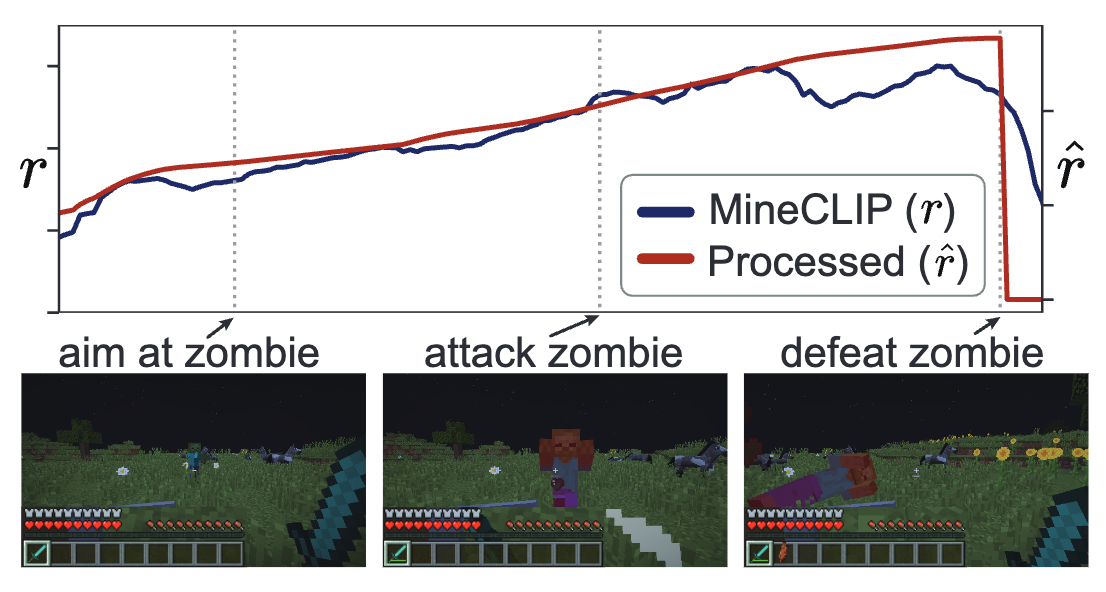

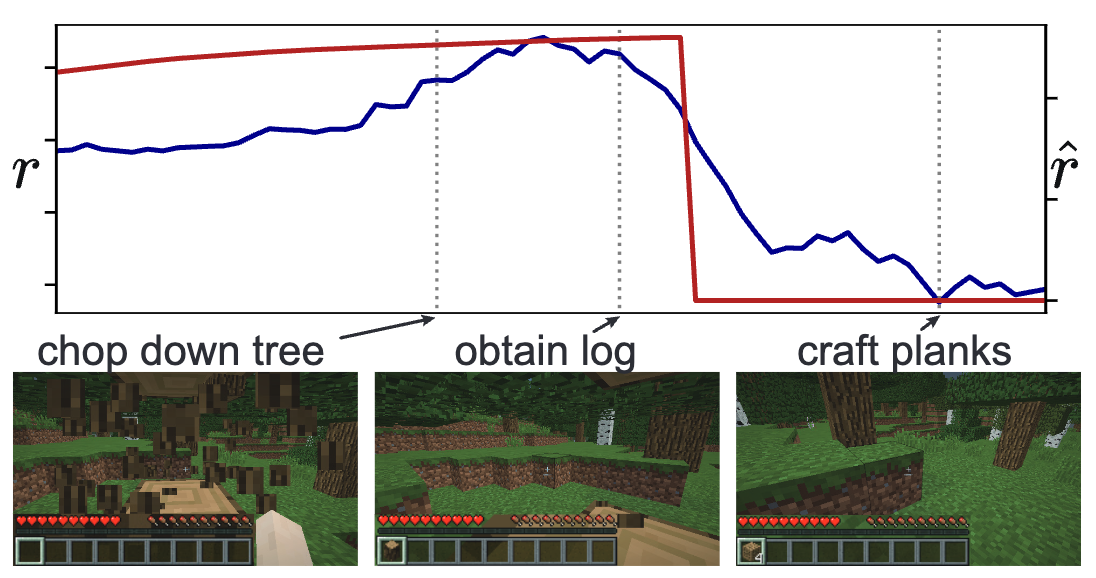

Reward Stabilization

The comparison between the first and second rows in the table shows the effect of reward stabilization. The graphs below show the difference between the raw VLM reward signals and the processed version.

(a) Combat a zombie

(b) Craft planks

Policy Initialization

The comparison between the first and third rows in the table shows the effect of policy initialization. A randomly initialized policy cannot learn properly, mainly because of the high complexity of the MineDojo environment and the imperfect VLM rewards.

Reward Type

We additionally experiment with two reward definitions: cosine similarity and softmax. We conclude that a naive application of the softmax reward does not efficiently improve performance.
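The page does not give formulas for the two reward types. One common reading, assumed here, is that the cosine reward is the raw similarity between the video and the target instruction, while the softmax reward normalizes that similarity against the similarities to a set of candidate instructions. The function name, the `temperature` default, and the candidate set in the sketch below are hypothetical.

```python
import math
from typing import Sequence


def softmax_reward(
    similarities: Sequence[float], target_index: int, temperature: float = 0.1
) -> float:
    """Softmax probability of the target instruction among all candidates.

    `similarities` holds the video's cosine similarity to each candidate
    instruction; the cosine reward would simply be similarities[target_index].
    """
    scaled = [s / temperature for s in similarities]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    return exps[target_index] / sum(exps)
```

Under this reading, the softmax reward depends on how the target compares with the other candidates rather than on its absolute similarity, so its scale changes with the candidate set and the temperature.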

BibTeX

@inproceedings{nam2023lift,
  title={LiFT: Unsupervised Reinforcement Learning with Foundation Models as Teachers},
  author={Taewook Nam and Juyong Lee and Jesse Zhang and Sung Ju Hwang and Joseph J Lim and Karl Pertsch},
  year={2023},
  booktitle={2nd Workshop on Agent Learning in Open-Endedness (ALOE) at NeurIPS 2023},
}